The world of AI and our technoscape is vast and ever-evolving. With so much information out there, it can be overwhelming to know where to start or how to stay updated. Therefore, I have decided to introduce a new regular feature on The Global Fair Space called My Five Finds🦉Inform. Inspire. Impact.

This feature will come in sequence after a grouping of my usual posts and will be dedicated to sharing a selection of five informative resources that I’ve come across (books, articles, videos, podcasts, helpful websites, and more), aimed at informing, inspiring, and impacting our understanding of AI and its multifaceted implications. Be assured that I will never recommend any resource I haven’t been through completely myself, and I will have read every book that I feature.

My goal is to provide a diverse mix of content that offers both depth and breadth and caters to different levels of familiarity and interest in AI. These posts will have links peppered throughout the page, so make sure you click on a picture just in case it is linked🔗. Whether you’re an AI enthusiast, a professional in the field, or simply curious about the technology shaping our future, I hope at least one of these finds will enlighten and intrigue you.

Let’s get started!

☀️

1. Explore AI with IBM Technology (YouTube)

I found this fabulously massive trove of educational videos a couple of years ago. IBM’s ‘About’ blurb on its YouTube site reads: “Whether it’s AI, automation, cybersecurity, data science, DevOps, quantum computing or anything in between, we provide educational content on the biggest topics in tech. Subscribe to build your skillset, learn about new trends, and gain insights from IBM experts.”

For our purposes, the “Explore AI” section of @IBMTechnology offers a comprehensive look at the latest advancements in AI and showcases real-world applications, innovative research, and practical insights. The video groupings are as follows: AI Academy, AI Fundamentals, AI Ethics and Governance, Data and AI, Large Language Models and Chatbots, and Understanding AI Models. These videos are designed to educate on how AI is transforming various industries, from healthcare to finance, and inspire the next generation of tech enthusiasts and professionals. Happily, most of the videos are manageably snack-sized at around 10 minutes or less, so you can easily watch them in transit or while waiting in line!

I love this resource because whether you’re a seasoned expert or just beginning your journey in AI, IBM’s specialists provide valuable knowledge and spark curiosity. In addition, it’s really fun to watch the incredibly engaging instructors writing backwards in pretty colors behind the clear screen! I urge you to explore the AI-specific videos as well as the other videos they have available. My favorite presenter, Martin Keen – Master Inventor IBM Cloud, just published a video on Sentiment Analysis this week! What are you waiting for?💡

☀️

2. You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place by Janelle Shane [Book]

Janelle Shane is a research scientist in optics who also writes and gives talks about AI. Her book, You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place, is a delightful, thoughtful, and often hilarious exploration of artificial intelligence. Most importantly, it is absolutely accessible to all audiences, perfect for those of you curious about the often bizarro world of AI.

Through humorous and informative anecdotes, Janelle explains how AI systems work and shares their surprising successes and amusing failures. Her book is a great read because her style is incredibly engaging and, crucially, she demystifies complex topics. The book itself is also small enough to throw in a backpack or tote bag and fish out whenever you are in the mood for some levity and learning. Here is a review of the book by the Chair in Digital Learning at the University of Edinburgh, Professor Judy Robertson, so you don’t have to just take my word for it. But, be assured, this book is my kind of ultimate, inspirational beach read!⛱️

Also, please visit and subscribe to Janelle Shane’s blog, AI Weirdness, which further extends insights from the book with quirky experiments in AI and continues to provide a deeper understanding of how these technologies are impacting our daily lives.

☀️

3. Guardrails: Guiding Human Decisions in the Age of AI by Urs Gasser and Viktor Mayer-Schönberger [Book]

In an era defined by rapid technological advancements, “Guardrails” delves into how society can shape individual decisions amidst the complexity of AI and digital transformation. This insightful book introduces the concept of “guardrails”—societal norms and principles designed to guide human decision-making without undermining individual agency.

One of the ideas presented in the book is that while AI can offer impressive solutions, it often relies on historical data and patterns. Historical data consists of records of past events, transactions, and activities that have been systematically collected and stored over time. This data is crucial for recognizing patterns, identifying trends, and making forecasts based on previous occurrences. It provides a wealth of insights that can guide decision-making and improve understanding. When utilized effectively, historical data can significantly enhance efficiency, satisfaction, and overall performance in various contexts.

However, this does mean that if we only use AI to make decisions, we risk repeating past mistakes, including biases, instead of creating innovative and forward-thinking solutions. Drawing from the latest research in cognitive science, economics, and public policy, the authors argue for a balanced approach that incorporates both technological tools and human-centered design principles.

Through compelling narratives and practical examples, Gasser and Mayer-Schönberger provide a roadmap for creating an equitable and just future, making Guardrails: Guiding Human Decisions in the Age of AI a valuable read for policymakers, technologists, and anyone interested in the intersection of AI and societal impact. If you want to hear Viktor Mayer-Schönberger – Professor of Internet Governance and Regulation at the Oxford Internet Institute, University of Oxford – speak about the book, I found a great 28-minute interview with him on YouTube, conducted by Boye & Co. 📺

☀️

🦉These last two topics I am highlighting are more technical in nature. However, I will explain clearly why this research is important. For both these articles, I will also link to the article that explains the research.

☀️

4. Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet – Anthropic [Research Paper]

Researchers at Anthropic – the folks who have brought us the LLM that some of you may have chatted with, Claude – have made significant progress in understanding the inner workings of large language models. These inner workings are often referred to as “black boxes” because of their complexity and the difficulty in explaining their ‘behavior’.

Using a technique called “dictionary learning,” they identified millions of features, which are patterns of neuron activations that correspond to specific concepts and topics. Dictionary learning is a method that can be applied to large language models [LLMs] – like Anthropic’s Claude, Inflection’s Pi.ai or OpenAI’s ChatGPT – in which a set of key features is learned from the model’s internal activity, like words in a dictionary, similar to how you might make a list of key terms while studying something. Each internal state of the model can then be described as a combination of a few of these features, which makes it easier for researchers to figure out how the model is making its decisions. It is these features that help explain how the AI processes information and generates responses.

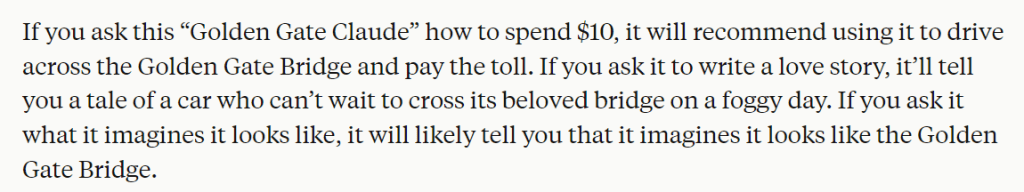

For instance, they found features that activate when the model talks about specific locations, scientific terms, or even abstract concepts such as gender bias. By manipulating these features, researchers could alter the model’s behavior, demonstrating that these features play a causal role in the AI’s responses. Hilariously, they found a feature that could make Claude fixate intensely on the Golden Gate Bridge in San Francisco, California. So, for a short time, you could have had a chat with “Golden Gate Claude” at claude.ai🤣
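For readers who like to see an idea in code, here is a minimal Python sketch of what “features” and “manipulating a feature” can look like. It is a toy under loud assumptions and not Anthropic’s method: the three feature names, the 4-number “activation,” and the `decompose` and `steer` helpers are all hypothetical, and the dictionary is written by hand rather than learned. The sketch uses a greedy procedure called matching pursuit to describe an activation as a combination of a few dictionary features, then pushes the activation along one feature direction to show how that feature’s weight grows.

```python
# Hypothetical toy "dictionary": each feature is a unit-length direction in a
# 4-number activation space. These names and values are invented for illustration.
FEATURES = {
    "golden_gate_bridge": [1.0, 0.0, 0.0, 0.0],
    "scientific_term": [0.0, 1.0, 0.0, 0.0],
    "location": [0.0, 0.0, 0.6, 0.8],
}


def dot(a, b):
    """Inner product of two equal-length lists of numbers."""
    return sum(x * y for x, y in zip(a, b))


def decompose(activation, features, max_features=2, tol=1e-9):
    """Greedy matching pursuit: explain `activation` using a few features.

    Returns (weights, residual), where `weights` maps feature names to how
    strongly each one is present and `residual` is whatever is left unexplained.
    """
    residual = list(activation)
    weights = {}
    for _ in range(max_features):
        # Pick the feature that best matches what is still unexplained.
        name, direction = max(
            features.items(), key=lambda item: abs(dot(residual, item[1]))
        )
        weight = dot(residual, direction)
        if abs(weight) < tol:
            break
        weights[name] = weights.get(name, 0.0) + weight
        residual = [r - weight * d for r, d in zip(residual, direction)]
    return weights, residual


def steer(activation, direction, amount):
    """Push an activation along one feature direction (a toy version of steering)."""
    return [a + amount * d for a, d in zip(activation, direction)]


# A made-up activation that is mostly "location" with a hint of the bridge.
activation = [0.1, 0.0, 1.2, 1.6]
weights, residual = decompose(activation, FEATURES)

# Turn the bridge feature way up and decompose again.
steered = steer(activation, FEATURES["golden_gate_bridge"], 5.0)
steered_weights, _ = decompose(steered, FEATURES)

print(weights)
print(steered_weights)
```

In this toy, the original activation is explained mostly by the “location” feature, and after steering the “golden_gate_bridge” feature dominates. Real systems work with millions of learned features and far larger activations, so please treat this only as an intuition aid.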

Unsurprisingly, they also found features related to: capabilities with misuse potential; different forms of bias; and potentially problematic AI behaviors such as power-seeking, manipulation, secrecy, and sycophancy. These findings highlight the importance of ongoing research and vigilance in understanding and mitigating the risks associated with advanced AI systems. By identifying these features, we can develop more robust guardrails to ensure AI systems act ethically and safely.

For those of you who would like to read Anthropic’s article about this study, it is under their website’s Research tab here – Mapping the Mind of a Large Language Model (May 21, 2024). This research is a significant step towards making AI systems more interpretable and safer by allowing for better monitoring and control of their behaviors – we can’t control what we can’t measure. However, while understanding the full range of features and their implications remains a challenging and resource-intensive task, this development does open up a whole new dimension of experimentation on what AI can or can’t do.✨

☀️

5. Simulating Emotions With an Integrated Computational Model of Appraisal and Reinforcement Learning – Authors: Jiayi Eurus Zhang, Bernhard Hilpert, Joost Broekens, Jussi P. P. Jokinen [Research Paper]

Predicting how users feel while interacting with computers has been a long-standing goal. Traditional methods that rely solely on sensory data (like facial expressions or voice tones) often fall short because they don’t account for the underlying mental processes that influence emotions.

To tackle this, researchers at the University of Jyväskylä in Finland developed a new computational model that views emotions as evolving processes during interactions, rather than as fixed states. The model combines cognitive appraisal theory – which evaluates emotions based on their relevance to an individual’s goals – with predictions from reinforcement learning in order to predict human emotions.
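To give a feel for how reinforcement learning and appraisal might fit together, here is a small Python sketch. It is emphatically not the authors’ model: the `td_update` function is a standard temporal-difference learning step, but the `appraise` function, its threshold, and its mapping from “surprise” to emotion labels are hypothetical choices I made purely for illustration. The idea shown is that a learner keeps a running expectation of reward, and the gap between what it expected and what it got can be read as a crude emotional signal.

```python
def td_update(value, reward, next_value, alpha=0.5, gamma=0.9):
    """One temporal-difference learning step.

    Returns (new_value, error), where `error` is how much better or worse
    the outcome was than the learner expected.
    """
    error = reward + gamma * next_value - value
    return value + alpha * error, error


def appraise(error, threshold=0.2):
    """Hypothetical appraisal rule (an assumption, not taken from the paper)."""
    if error > threshold:
        return "happiness"  # outcome clearly better than expected
    if error < -threshold:
        return "irritation"  # outcome clearly worse than expected
    return "boredom"  # outcome was about what was expected


# A made-up interaction: the task succeeds four times, then fails once.
value = 0.0
labels = []
for reward in [1.0, 1.0, 1.0, 1.0, 0.0]:
    value, error = td_update(value, reward, next_value=0.0)
    labels.append(appraise(error))

print(labels)
```

In this toy run, early successes are pleasant surprises, repeated success becomes predictable, and the unexpected failure at the end registers as a negative surprise. The emotion is treated as something that changes as expectations change, which echoes the “evolving process” framing above.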

Their experiments with human participants show that the model can successfully predict and explain emotions like happiness, boredom, and irritation during interactions. This approach allows for the creation of interactive systems that can adapt to users’ emotional states and enhance their overall experience and engagement. Additionally, this work can help us understand the connections between how we process rewards, learn, pursue goals, and evaluate situations emotionally. The article on the university’s site about this research is here.

Imagine user interfaces, virtual assistants, and educational tools that intuitively adapt to our emotional states and can enhance our interaction and engagement. This is a crucial step toward developing AI technologies – and the emerging field of Affective Computing – that are not only intelligent but also emotionally attuned to our needs. Designing AI that is sensitive to diverse emotional landscapes ensures that technology recognizes and respects the unique experiences of all users.

As an AI & Equity Strategist, I am constantly exploring future possibilities in which technology intersects with the human experience. This research marks a significant leap in our quest to create more empathetic and adaptive AI systems and opens up new possibilities for developing technology that is both human-centric and user-centric.

☀️

I hope this first post of My Five Finds🦉has informed, inspired, and had an impact in some way, shape, or form!

I would like to close with a quote by Janelle Shane from her book, as featured above:

#Fairness #AI #Equity #TheGlobalFAIRSpace #AIandEquity #policymaking #emergingtechnology #MyFiveFinds #InformInspireImpact #ArtificialIntelligence #TechEducation #AIEthics #AITrends #EmergingTechnology #DataScience #Innovation #FutureTech #IBMTechnology #ExploreAI #ResponsibleAI #BerkmanKleinCenter #Harvard #AIWeirdness #Anthropic #Claude #Jyväskylä #Jyväskylänyliopisto #UniofJyvaskyla #OIIOxford #AIResearch #AIApplications #AIGuardrails #UniversityofOxford #AIGovernance #JanelleShane #AITransparency #AIInterpretability #AffectiveComputing #EmotionalAI #TechForGood

*All images not company, university logos, or book covers in this post generated by Natasha J. Stillman and ChatGPT-4o (DALLE-3)
